OpenAI marked the end of its “12 Days of OpenAI” announcements with the introduction of two advanced reasoning models: o3 and o3 mini.

These models succeed the o1 reasoning model released earlier this year. Interestingly, OpenAI skipped the name “o2” to avoid potential conflict or confusion with the British telecom company O2.

The o3 Model: Setting New Standards in Reasoning and Intelligence

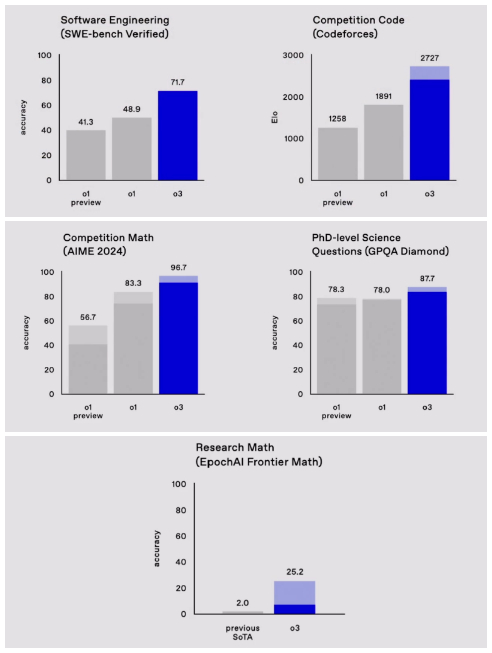

The o3 model sets a new standard for reasoning and intelligence, surpassing its predecessors in several domains:

- Coding: Accuracy on the SWE-bench Verified coding tests improved by 22.8 percentage points compared to o1.

- Mathematics: Scored about 96.7% on the AIME 2024 exam, missing just one question.

- General Science: Scored 87.7% on GPQA Diamond, which assesses expert-level science problems.

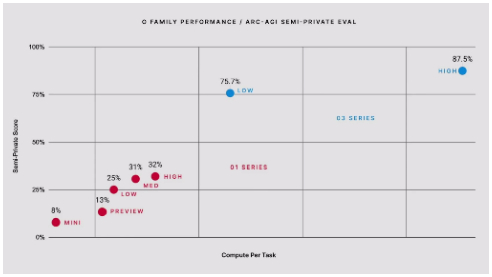

- ARC-AGI Benchmark: Ended the ARC-AGI benchmark’s five-year unbeaten streak with a score of 87.5% under high-compute settings, surpassing the human-level threshold of 85%.

The ARC-AGI benchmark assesses general intelligence by testing a model’s ability to solve novel problems without relying on memorized patterns. With this result, OpenAI describes the o3 model as an important step toward Artificial General Intelligence (AGI).
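The headline figures above can be sanity-checked with a little arithmetic. The sketch below assumes the AIME 2024 evaluation covers both exams (AIME I and AIME II, 15 questions each, 30 in total); the article itself does not state the question count, so treat that number as an assumption. The helper name `percent_correct` is illustrative only.

```python
# Assumption (not stated in the article): AIME 2024 = AIME I + AIME II,
# 15 questions each, so 30 questions in total.
AIME_QUESTIONS = 30


def percent_correct(missed: int, total: int) -> float:
    """Percentage of questions answered correctly, rounded to one decimal."""
    return round(100 * (total - missed) / total, 1)


# Missing one question out of 30 matches the reported score.
aime_score = percent_correct(missed=1, total=AIME_QUESTIONS)

# Margin of the reported ARC-AGI score over the 85% human-level threshold,
# in percentage points.
arc_margin = round(87.5 - 85.0, 1)

print(aime_score)  # 96.7
print(arc_margin)  # 2.5
```

Under that assumption, a single missed question accounts for the 96.7% figure, and the ARC-AGI result clears the threshold by 2.5 percentage points.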

o3 Mini: A compact, cost-effective alternative

The o3 Mini offers a distilled version of o3, optimized for performance and affordability:

- Designed for fast performance on coding tasks.

- Features three compute settings: low, medium, and high.

- Outperforms the larger o1 model at the medium compute setting, while offering lower cost and latency.
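To make the three compute settings concrete, here is a minimal sketch of how a caller might select one when building a request. The article does not describe an API, so everything here is an assumption for illustration: the model identifier `"o3-mini"`, the `reasoning_effort` field name, and the `build_request` helper. The code only assembles a payload and makes no network call.

```python
# Hypothetical sketch: builds a request payload only; no network call is made.
VALID_EFFORTS = ("low", "medium", "high")  # the three settings named above


def build_request(prompt: str, effort: str = "medium") -> dict:
    """Return a chat-style request payload for a given compute setting."""
    if effort not in VALID_EFFORTS:
        raise ValueError(f"effort must be one of {VALID_EFFORTS}, got {effort!r}")
    return {
        "model": "o3-mini",          # assumed model identifier
        "reasoning_effort": effort,  # assumed field name for the compute setting
        "messages": [{"role": "user", "content": prompt}],
    }


payload = build_request("Write a function that reverses a linked list.", effort="low")
print(payload["reasoning_effort"])
```

Defaulting to the medium setting reflects the trade-off described above: it is the level at which o3 Mini is reported to outperform o1 at lower cost and latency.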

Deliberative alignment for better safety

OpenAI introduces deliberative alignment, a new training paradigm that aims to improve safety by incorporating structured reasoning aligned with human-written safety specifications. Key aspects include:

- Models explicitly engage in chain-of-thought (CoT) reasoning in line with OpenAI’s policies.

- Eliminates the need for human-labeled CoT data while improving adherence to safety standards.

- Enables context-sensitive, safer responses at inference time compared to earlier approaches such as RLHF and Constitutional AI.

Training and procedures

Deliberative alignment employs both process-based and outcome-based supervision:

- Training begins with helpfulness tasks, excluding safety-specific data.

- A dataset of examples that reference the safety specifications is then developed for fine-tuning.

- Reinforcement learning refines the model using reward signals tied to safety compliance.

Results:

- The o3 model outperformed GPT-4o and other state-of-the-art models on internal and external safety benchmarks.

- A significant improvement was noted in avoiding harmful outputs while still permitting benign responses.

Early access and research opportunities

The first version of the o3 model will be released in early 2025. OpenAI invites safety and security researchers to apply for early access, with applications closing on 10 January 2025. Selected researchers will be notified shortly afterward.

Program participants will:

- Create new evaluations to assess AI capabilities and risks.

- Develop controlled demonstrations of potential high-risk scenarios.

- Contribute insights to OpenAI’s safety framework.

Focus on AI safety research

OpenAI continues to prioritize safety research as reasoning models become increasingly sophisticated. This initiative is in line with its ongoing collaboration with organizations such as the US and UK AI Safety Institutes, aimed at ensuring that advances in AI remain safe and beneficial.
